At Quantum Formalism (QF) Academy, when people tell us they want to “learn higher mathematics,” we often notice the same mistake: they treat it like a weekend project, a book to skim now and then, or a course they can binge and finish.

In this episode, we draw a simple but powerful analogy: learning mathematics is like building physical fitness. Just as no one gets fit by going to the gym a few times, no one masters topics such as topology, functional analysis, or differential geometry by studying in short bursts. Fitness needs a daily routine, steady progress, and long-term commitment, and maths is no different.

We’ll talk about why consistency matters more than intensity, how to set up your learning like a training plan with warm-ups, gradual challenges, and recovery, and why being part of a community makes growth sustainable.

Disclaimer: This is an experimental podcast that uses AI narration of a script written by humans. The narration occasionally goes off track because of technical glitches and LLM hallucinations, but most of the time it gets the technical content right.

Happy rest of the week!

QF Academy team

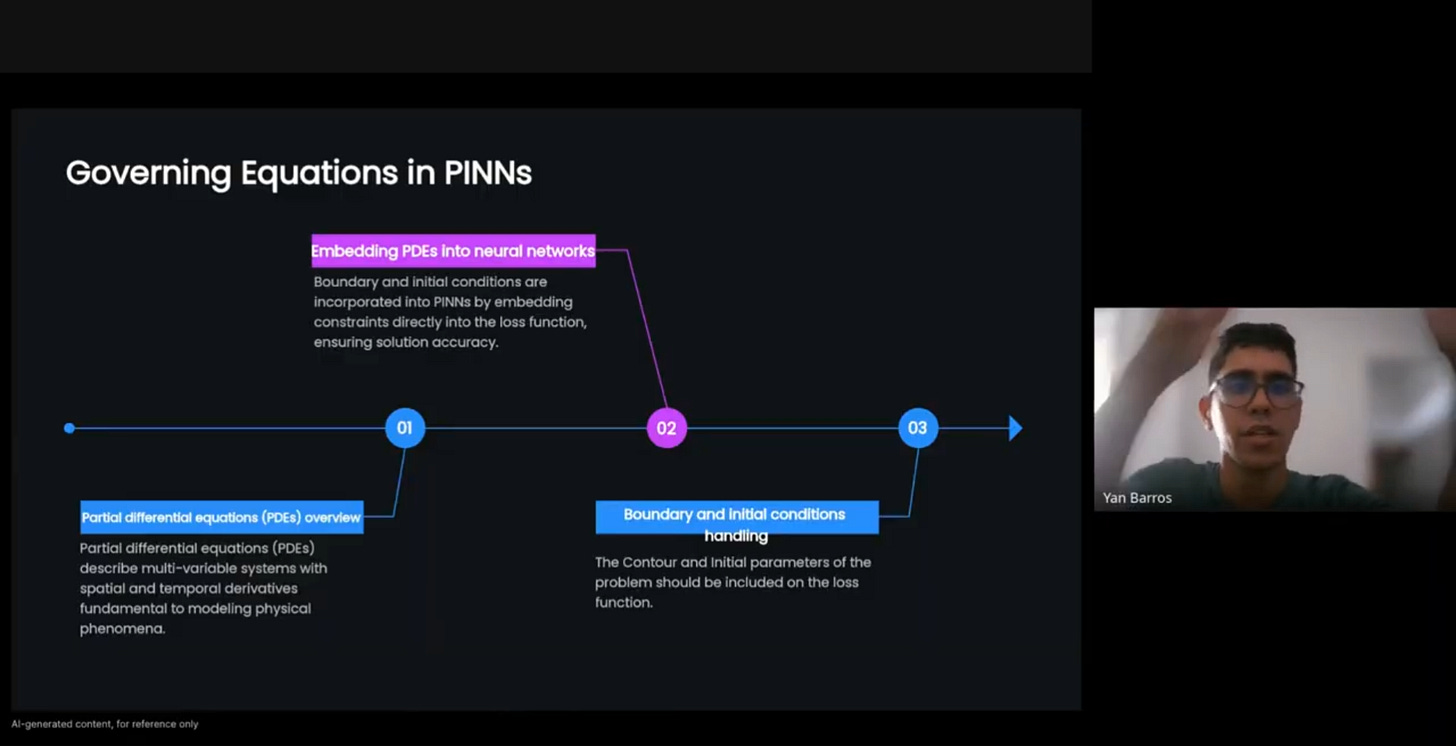

Reminder: Physics-Informed Neural Networks (PINNs)

Did you know you can still join the workshop before Friday’s session?

Register today (link below) to unlock the exclusive replay and resources from the first session, which will be available later today, so you're ready for the next one.

Some of the topics discussed in the inaugural session included:

🔧 𝐀𝐮𝐭𝐨𝐦𝐚𝐭𝐢𝐜 𝐬𝐞𝐥𝐞𝐜𝐭𝐢𝐨𝐧 of rates in the PINN loss function for balanced training

🏗️ 𝐁𝐞𝐬𝐭 𝐚𝐫𝐜𝐡𝐢𝐭𝐞𝐜𝐭𝐮𝐫𝐞𝐬 & 𝐨𝐩𝐭𝐢𝐦𝐢𝐬𝐚𝐭𝐢𝐨𝐧 strategies to improve accuracy and convergence

🌍 𝐌𝐮𝐥𝐭𝐢-𝐏𝐡𝐲𝐬𝐢𝐜𝐬 𝐏𝐈𝐍𝐍𝐬 with transfer learning to accelerate cross-domain problem solving

📊 𝐓𝐫𝐚𝐢𝐧𝐢𝐧𝐠 𝐰𝐢𝐭𝐡 𝐝𝐚𝐭𝐚 𝐩𝐨𝐢𝐧𝐭𝐬 & 𝐜𝐨𝐥𝐥𝐨𝐜𝐚𝐭𝐢𝐨𝐧 𝐩𝐨𝐢𝐧𝐭𝐬 for robust solution generalisation

The presenter also shared practical examples using both 𝐏𝐲𝐓𝐨𝐫𝐜𝐡 and 𝐏𝐢𝐧𝐧𝐟𝐚𝐜𝐭𝐨𝐫𝐲, the open-source framework he developed to make it easier to create loss functions and train PINNs.

👉 𝐉𝐨𝐢𝐧 𝐨𝐮𝐫 𝐏𝐈𝐍𝐍𝐬 𝐯𝐢𝐫𝐭𝐮𝐚𝐥 𝐰𝐨𝐫𝐤𝐬𝐡𝐨𝐩: https://quantumformalism.academy/pinns-workshop